Unreasonably Effective, or Reasonably Ineffective?

I’ve been reading and re-reading some articles about machine learning and natural language processing (NLP). I’m no machine learning expert—I’ve spun up an instance of TensorFlow once and had no idea what I was doing with it—but I am interested in how computers deal with language and why it’s so weird, so I’m just going to write some of my thoughts and observations here.

First, here are some of the articles I’ve been looking at, two of which inspired the title of this post:

- The Unreasonable Ineffectiveness of Deep Learning in NLU, by Suman Deb Roy, from June 2017

- The Unreasonable Effectiveness of Recurrent Neural Networks, by Andrej Karpathy, from May 2015

- Deep neural networks are easily fooled: High confidence predictions for unrecognizable images, by Nguyen, Yosinski, and Clune, from 2015

Karpathy: Unreasonable Effectiveness

Andrej Karpathy’s 2015 article, “The Unreasonable Effectiveness of Recurrent Neural Networks”, is apparently sort of a classic, I guess because it was one of the first accessible, readable (but also fairly technical) summaries of what’s actually going on when a recurrent neural network generates language. To simplify Karpathy’s explanation: the neural networks that produce such Shakespearian catastrophes as

They would be ruled after this chamber, and

my fair nues begun out of the fact, to be conveyed,

Whose noble souls I’ll have the heart of the wars.

are basically giant complex statistical programs that take as input a huge amount of text, look at the character-to-character sequences in the huge amount of text, do some fancy math, and spit out things that are statistically similar to the input according to a model that was produced during the “fancy math” stage. Of course, it’s all gibberish, but it’s mostly well-structured gibberish.
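To make the "look at character-to-character sequences, then spit out something statistically similar" idea concrete, here is a minimal sketch in Python. To be clear, this is not an RNN and not Karpathy's code: it swaps in a much simpler technique, a character-level Markov chain that just counts which character tends to follow each three-character context. The function names (`train_char_model`, `generate`) and the `order` parameter are my own inventions for illustration, and the tiny corpus is just the sample quoted above.

```python
import random
from collections import Counter, defaultdict


def train_char_model(text, order=3):
    """Count which character follows each `order`-character context in the text."""
    counts = defaultdict(Counter)
    for i in range(len(text) - order):
        context = text[i:i + order]
        next_char = text[i + order]
        counts[context][next_char] += 1
    return counts


def generate(counts, seed, length=80, rng=None):
    """Extend `seed` one character at a time, sampling from the observed counts."""
    rng = rng or random.Random(0)  # fixed seed so the sketch is repeatable
    order = len(seed)
    out = seed
    for _ in range(length):
        followers = counts.get(out[-order:])
        if not followers:
            break  # this context never appeared in the training text
        chars, weights = zip(*followers.items())
        out += rng.choices(chars, weights=weights)[0]
    return out


# Toy corpus: the generated "Shakespeare" sample quoted in this post.
corpus = (
    "They would be ruled after this chamber, and "
    "my fair nues begun out of the fact, to be conveyed, "
    "Whose noble souls I'll have the heart of the wars."
)

model = train_char_model(corpus, order=3)
sample = generate(model, seed=corpus[:3], length=60)
print(sample)
```

With a corpus this small the output mostly parrots the input, but the principle is the same one described above: every four-character window in the output is something the model has already seen. An RNN replaces the lookup table of counts with learned weights, which is presumably where the "fancy math" comes in.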

Obviously the output of these models is a bit goofy, but Karpathy is well aware of that and also provides a nice summary of the practical things that RNNs could do with language in 2015, like translation, speech-to-text, and image captioning. As far as I can tell, what he means by “unreasonable effectiveness” is something along the lines of, “isn’t it cool that this RNN using a character-level model can produce sentences that are semi-coherent?” I would add that the lines in the Shakespeare examples are surprisingly often in regular iambic pentameter, and that there is something surprising about how the RNN seems to have picked up some kind of idea of grammar. One of Noam Chomsky’s examples for why a “universal grammar” might exist is that the sentence “colorless green ideas sleep furiously” is perfectly grammatical even though it is meaningless. It feels like there’s something going on here where somebody who knows more than me could draw an interesting connection between machine learning language models and Chomsky.

Speaking of Chomsky, he recently did this interesting interview about Elon Musk’s Neuralink project, which I also wrote about.

(Also, one last note on Karpathy: he regularly uses the language of “knowing”, “understanding”, and “deciding” to talk about things that computers do, things that are really just statistical processes. This is troubling because it suggests that our knowing, understanding, and deciding are “really just” statistical processes. Does he realize he’s suggesting this?)

Deb Roy: Unreasonable Ineffectiveness

Suman Deb Roy’s recent article, “The Unreasonable Ineffectiveness of Deep Learning in NLU”, argues that deep learning (which is a thing you can do with RNNs, if I understand correctly) is actually quite bad at natural language understanding (NLU), despite over-hyped claims to the contrary. Deb Roy shows that “old-fashioned” natural language processing (NLP) techniques (like n-gram and bag-of-words models) are generally better than, or at least as good as, deep learning techniques when it comes to tasks like assigning a topic to a given sentence.
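For anyone who hasn't run into the term, a bag-of-words model throws away word order and keeps only word counts. Here is a toy sketch in Python of what assigning a topic to a sentence might look like under that approach. This is not Deb Roy's method or data: the two topics, the training sentences, and the overlap-based scoring in `classify` are all made up by me for illustration, and a real classifier would use far more data and a proper statistical model.

```python
from collections import Counter


def bag_of_words(sentence):
    """Lowercase, split on whitespace, and count words; word order is discarded."""
    return Counter(sentence.lower().split())


# Hypothetical, hand-made training sentences for two made-up topics.
training = {
    "sports": ["the team won the match", "a late goal won the game"],
    "cooking": ["simmer the sauce and stir", "bake the bread until golden"],
}

# One bag per topic: the summed word counts of its training sentences.
topic_bags = {
    topic: sum((bag_of_words(s) for s in sentences), Counter())
    for topic, sentences in training.items()
}


def classify(sentence):
    """Pick the topic whose bag shares the most word occurrences with the sentence."""
    words = bag_of_words(sentence)
    scores = {
        topic: sum(min(n, bag[w]) for w, n in words.items())
        for topic, bag in topic_bags.items()
    }
    return max(scores, key=scores.get)


print(classify("stir the sauce"))
print(classify("the team scored a goal"))
```

Nothing here understands what a sauce or a goal is. The sentence just gets matched to whichever pile of words it overlaps with most, which makes it a little humbling that methods in this family can hold their own against deep learning on this kind of task.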

Deb Roy seems to be saying that just when we thought that machine learning models were unreasonably effective, it turns out that there’s a specific set of natural language problems at which they are unreasonably not effective.

There’s a section in the article titled “Why is this Unreasonable?”, where Deb Roy admits that we expected more from new convolutional neural net models, since they are really pretty good at things like classifying images and video. We expected better performance for topic classifiers, and haven’t found it. He concludes,

Why this gap in performance between image/video/audio vs. language data? Perhaps it has to do with the patterns of biological signal processing required to “perceive” the former while the patterns of cultural context required to “comprehend” the latter? In any case, there is still much we have to learn about the intricacies of learning itself, especially with different forms of multimedia.

And I appreciate this honesty. Language is hard, and modelling it statistically doesn’t seem to get us where we want to go.

There’s an NLP paper from 2006 that includes the phrase, “language is a system of rare events, so varied and complex, that we can never model all possibilities”. If I suppress my copy-editor instincts and ignore the misplaced comma, I see this as an actually pretty insightful observation about why language is a “hard problem”, as they say. Language is an infinite space, and the ability to create and discern meaning within that infinite space is something we’ve barely begun to understand.

Unreasonably effective, or reasonably ineffective?

I’m not sure whether there’s a real conflict between these two articles by Karpathy and Deb Roy, but I don’t think there is. I think Deb Roy is giving a fair account of the state of the technology in 2017 while, in contrast, Karpathy’s article is the AI-researcher equivalent of a frat boy building a riding lawn mower that can go 60 miles per hour: impressive, even if it’s not particularly inspiring. If I can come to any conclusion from my reading, it’s that neural networks applied to language for any task requiring semantic comprehension (beyond something like speech-to-text) are just a fun toy, with no real use. I know that there are a lot of big-dollar startups right now based on the idea that no, neural networks are the future of automated language, which is the future of commerce, but I reserve the right to be cynical and cranky about them.

Deep neural networks are easily fooled

The final link in my list at the beginning of this post is just a paper from 2015 that shows humans can easily trick image-recognition models into thinking that images of static or abstract patterns are actually things like armadillos and assault rifles. Here’s an example, with the computer-generated label below each doctored image.

I’m just including this as a reminder that computer models intended to do human tasks can often be fragile and dumb. Fooling computers is incredibly easy, so I guess it’s a good thing that we don’t really think of it as lying?

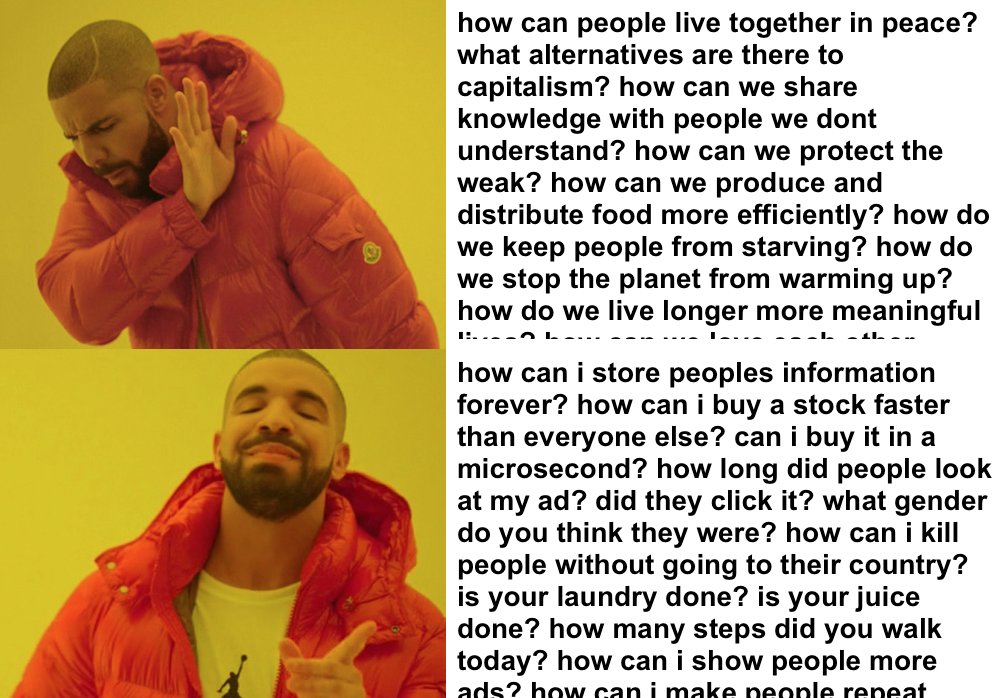

Just a thing from Twitter

One of my favorite Twitter accounts is @computerfact. This is from that account: